Predicting 3D Action Target from 2D Egocentric Vision for HRI

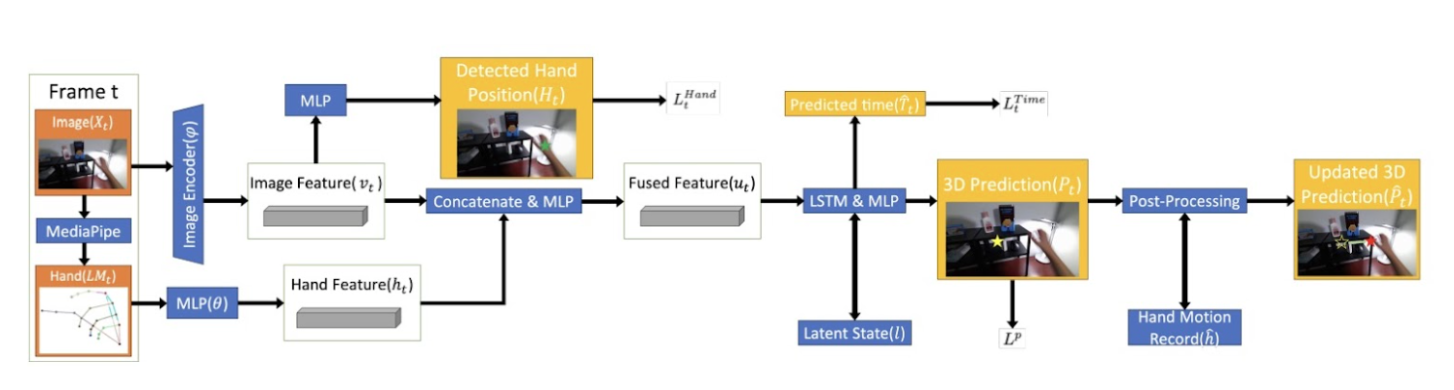

Improved the safety and efficiency of Human-Robot Interaction (HRI) with an algorithm that predicts the 3D target of a human action from 2D egocentric vision, and implemented real-world HRI demonstrations on a UR10e robot (paper accepted at ICRA 2024).

Can first-order methods achieve the same performance as (established) second-order methods when used at high frequency in MPC?

Keywords: Machine learning, HRI, Real-world scenarios, Obstacle avoidance, Human action prediction

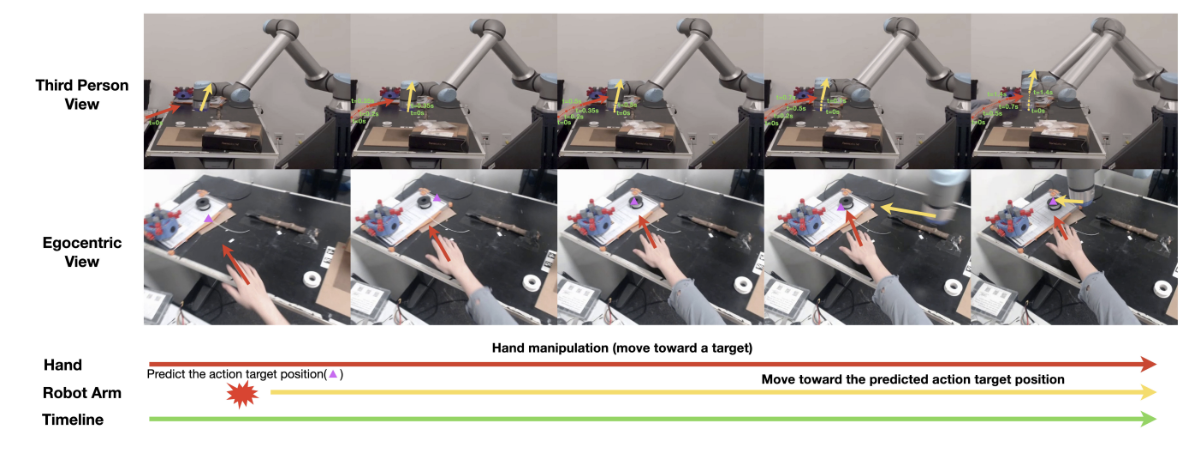

- Proposed HRI demonstrations that showcase real-world scenarios in which a human and a robot share a common workspace.

- Developed an obstacle avoidance controller based on Differential Dynamic Programming (DDP), with customized soft constraints that keep the robot away from the predicted human action target.

- Integrated the prediction algorithm with the obstacle avoidance and reaching controllers, then deployed the combined system on a UR10e for real-world HRI demonstrations.
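The bullets above combine a reaching objective with a DDP controller whose soft constraints keep the robot away from the predicted human action target. The following is a minimal sketch of that idea under stated assumptions, not the controller used on the UR10e. It assumes a 3D point-mass end effector with single-integrator dynamics in place of the arm's kinematics, models the predicted target as a sphere, and penalizes penetration with a quadratic soft constraint on h(p) = r^2 - ||p - c||^2. The names `soft_constraint`, `ddp`, `total_cost`, `rollout`, the radius, and all weights are hypothetical. Because these dynamics are linear, DDP's second-order dynamics terms vanish, so the backward pass below coincides with iLQR.

```python
import numpy as np

# Assumed parameters (hypothetical, chosen for illustration only).
DT = 0.05      # control period [s]
N = 40         # horizon length [steps]
R = 1e-2       # control-effort weight
QF = 100.0     # terminal reaching weight
W_SOFT = 1e4   # soft-constraint weight


def soft_constraint(p, center, radius):
    """Penalty W_SOFT * h^2 on h(p) = radius^2 - ||p - center||^2, active only when h > 0.

    Returns (cost, gradient, Gauss-Newton Hessian). The Hessian drops the
    negative-curvature term of h so that it stays positive semidefinite."""
    d = p - center
    h = radius**2 - d @ d
    if h <= 0.0:
        return 0.0, np.zeros(3), np.zeros((3, 3))
    return W_SOFT * h**2, -4.0 * W_SOFT * h * d, 8.0 * W_SOFT * np.outer(d, d)


def total_cost(xs, us, goal, center, radius):
    """Control effort + soft constraint along the path + terminal reaching cost."""
    cost = 0.0
    for t in range(N):
        cost += 0.5 * R * us[t] @ us[t] + soft_constraint(xs[t], center, radius)[0]
    e = xs[N] - goal
    return cost + 0.5 * QF * e @ e + soft_constraint(xs[N], center, radius)[0]


def rollout(x0, us):
    """Simulate the assumed single-integrator dynamics p_{t+1} = p_t + DT * u_t."""
    xs = np.zeros((N + 1, 3))
    xs[0] = x0
    for t in range(N):
        xs[t + 1] = xs[t] + DT * us[t]
    return xs


def ddp(x0, goal, center, radius, iters=50):
    us = np.zeros((N, 3))
    xs = rollout(x0, us)
    cost = total_cost(xs, us, goal, center, radius)
    for _ in range(iters):
        # Backward pass: quadratic model of the value function (A = I, B = DT * I).
        _, gx, hx = soft_constraint(xs[N], center, radius)
        Vx, Vxx = QF * (xs[N] - goal) + gx, QF * np.eye(3) + hx
        ks, Ks = np.zeros((N, 3)), np.zeros((N, 3, 3))
        for t in reversed(range(N)):
            _, lx, lxx = soft_constraint(xs[t], center, radius)
            Qx, Qu = lx + Vx, R * us[t] + DT * Vx
            Qxx, Quu, Qux = lxx + Vxx, R * np.eye(3) + DT**2 * Vxx, DT * Vxx
            ks[t] = -np.linalg.solve(Quu, Qu)    # feedforward term
            Ks[t] = -np.linalg.solve(Quu, Qux)   # feedback gain
            Vx = Qx + Ks[t].T @ Quu @ ks[t] + Ks[t].T @ Qu + Qux.T @ ks[t]
            Vxx = Qxx + Ks[t].T @ Quu @ Ks[t] + Ks[t].T @ Qux + Qux.T @ Ks[t]
            Vxx = 0.5 * (Vxx + Vxx.T)
        # Forward pass with a simple backtracking line search on the step size.
        for alpha in (1.0, 0.5, 0.25, 0.1, 0.05):
            new_xs, new_us = np.zeros_like(xs), np.zeros_like(us)
            new_xs[0] = x0
            for t in range(N):
                new_us[t] = us[t] + alpha * ks[t] + Ks[t] @ (new_xs[t] - xs[t])
                new_xs[t + 1] = new_xs[t] + DT * new_us[t]
            new_cost = total_cost(new_xs, new_us, goal, center, radius)
            if new_cost < cost:
                xs, us, cost = new_xs, new_us, new_cost
                break
        else:
            break  # no step size improved the cost: stop iterating
    return xs, us


# Usage: reach from the origin to a goal while avoiding a hypothetical predicted target.
x0, goal = np.zeros(3), np.array([1.0, 0.0, 0.0])
human_target = np.array([0.5, 0.05, 0.0])
xs, us = ddp(x0, goal, human_target, radius=0.2)
print("closest approach:", np.min(np.linalg.norm(xs - human_target, axis=1)))
```

The Gauss-Newton Hessian keeps `Quu` positive definite, so each backward pass is well posed, and the soft constraint lets the optimizer trade a small detour against penetration rather than failing when the predicted target lies on the direct path. In a receding-horizon setting, one would presumably re-solve this problem each control cycle with the latest predicted target.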